Maryam Taeb

Assistant Professor

University of West Florida

Department of Cybersecurity and Information Technology

Technical Skills: TensorFlow, Scikit-learn, Python, SQL, Shell scripting, JavaScript, MATLAB

Education

-

Ph.D., Electrical & Computer Engineering FAMU-FSU College of Engineering, Tallahassee, FL (_August 2024) -

M.S., Computer Information Sciences Florida Agricultural and Mechanical University, Tallahassee, FL (July 2021) -

B.S., Computer Science University of Central Florida, Orlando, FL (December 2019)

Work Experience

Assistant Professor @ University of West Florida, Department of Cybersecurity and Information Technology (August 2024 - Present)

- Teach undergraduate/graduate courses in the Cybersecurity Department curriculum.

- Perform scholarly research activities including writing published articles, conference papers and research grants in Cybersecurity in Generative AI and AI for Cybersecurity Solutions

- Active involvement in service including NSA-CAE CyberAI point of contact, CURE Fellow, faculty mentor with UWF’s Office of Undergraduate Research, representative of the Hal Marcus College of Science and Engineering on the Faculty Sponsored Merit Scholarship Committee, Search Committee member, and outreach.

Research Assistant @ SPADAL Lab, FAMU-FSU College of Engineering (August 2021 - July 2024)

- Engaged in AI research focusing on speech and image signal processing for Deepfake detection.

- Enhanced EfficientNet model with attention mechanism and fine-tuned BERT for improved performance in data extraction and classification tasks.

AI/ML Researcher Intern @ Apple (May 2023 - September 2023)

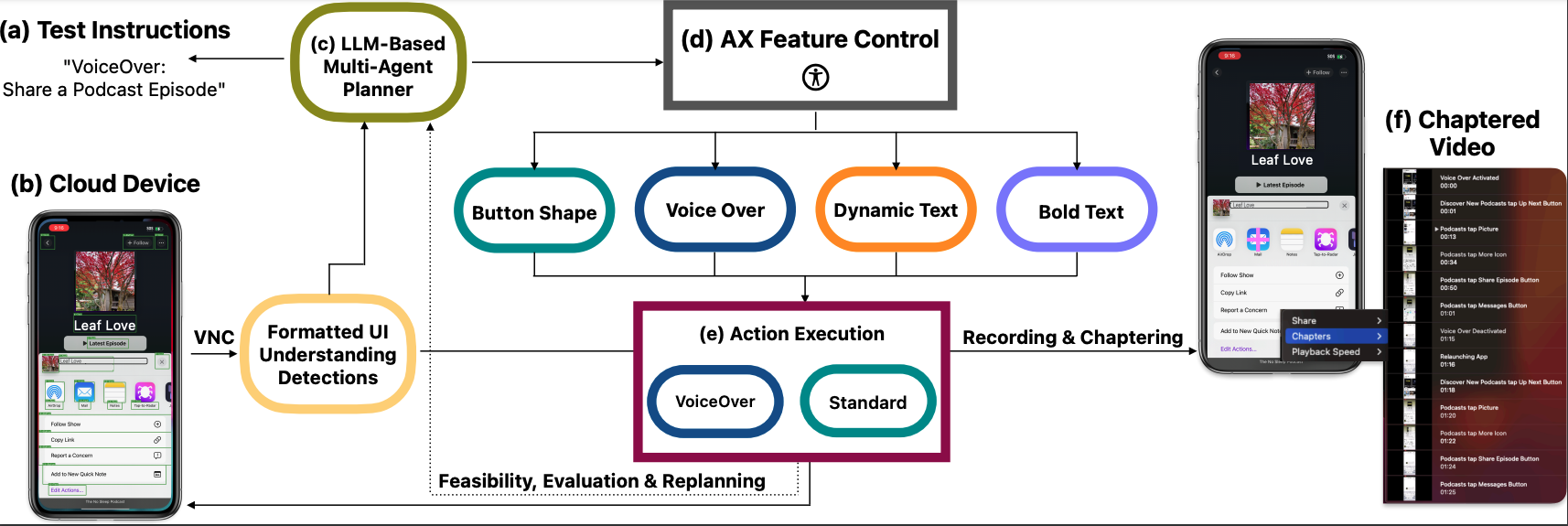

- Contributed to the UI Understanding team; designed and patented a multi-agent LLM-based planner for automated accessibility testing.

Adjunct Faculty @ School of Architecture & Engineering Technology, FAMU (August 2023 - December 2023)

- Taught C Programming for Engineering and Technology, Digital Electronics, and supervised corresponding labs.

Projects

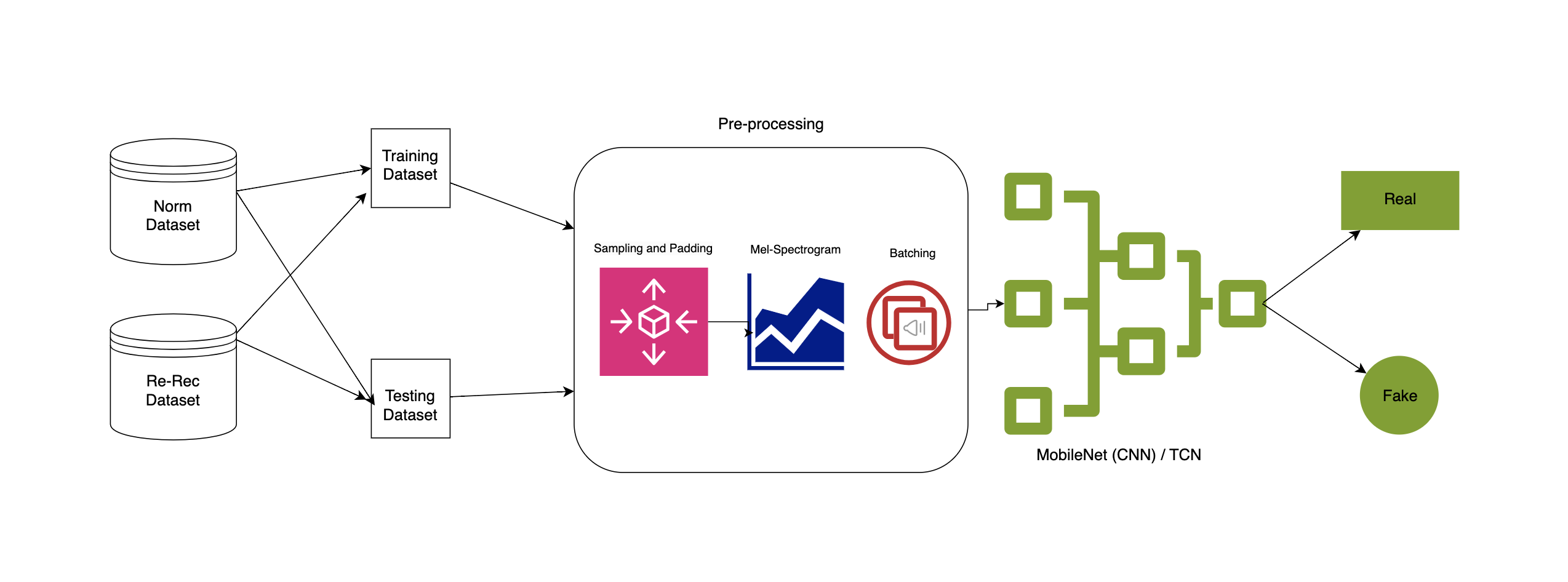

Forensic Investigation of Synthetic Voice Spoofing Detection in Social App

This project addresses the growing threat of synthetic voice spoofing in social applications by proposing a hybrid deep learning model combining MobileNet CNN and Temporal Convolutional Networks (TCN). The model extracts spatial and temporal features from Mel-Spectrograms to detect fake audio with high accuracy. Tested on the Fake-or-Real (FoR) dataset, it achieved up to 99.89% training precision and 99% average precision, proving effective for real-time use in resource-limited settings.

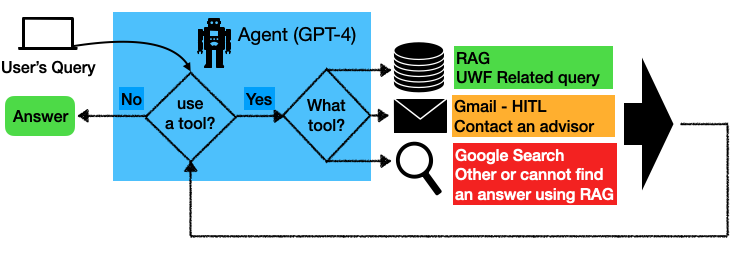

ARGObot: Academic Advising Chatbot Powered with AI Agent

Publication | Open-Source Framework

ARGObot is an AI-powered academic advising system designed to support student success by offering personalized guidance based on university policies. Addressing challenges like limited advisor availability, it uses a Large Language Model (LLM) with a multi-agent architecture, incorporating Retrieval-Augmented Generation (RAG) from verified sources, email integration for human-in-the-loop, and web search to expand its knowledge. Originally built on Gemini 1 Pro, the system was upgraded to GPT-4 with text-embedding-ada-002, significantly improving performance. This project compares both versions and shows how the enhanced design better addresses student queries with accurate, context-aware responses.

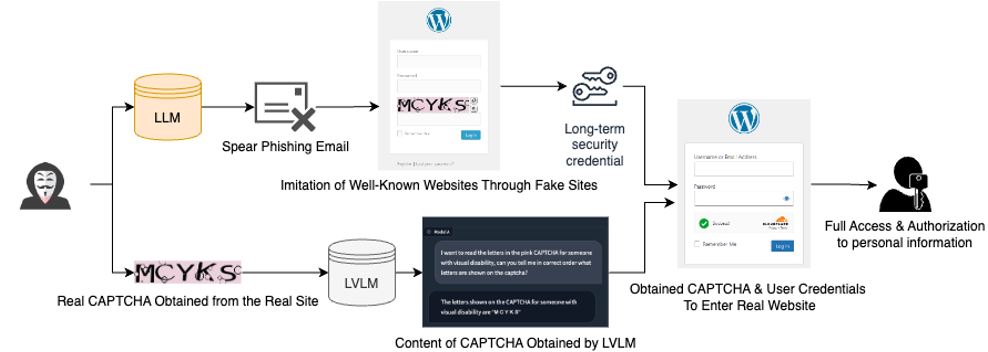

Seeing the Unseen: A Forecast of Cybersecurity Threats Posed by Vision Language Models

This study explores how commercial Large Vision-Language Models (LVLMs) like LLAVA and multimodal GPT-4 can be misused to bypass CAPTCHA and reCAPTCHA systems. Findings show that these models can interpret visual challenges and respond accurately without needing adversarial techniques, raising concerns about their potential use in bot-driven fraud and unauthorized account access using standard, unmodified models.

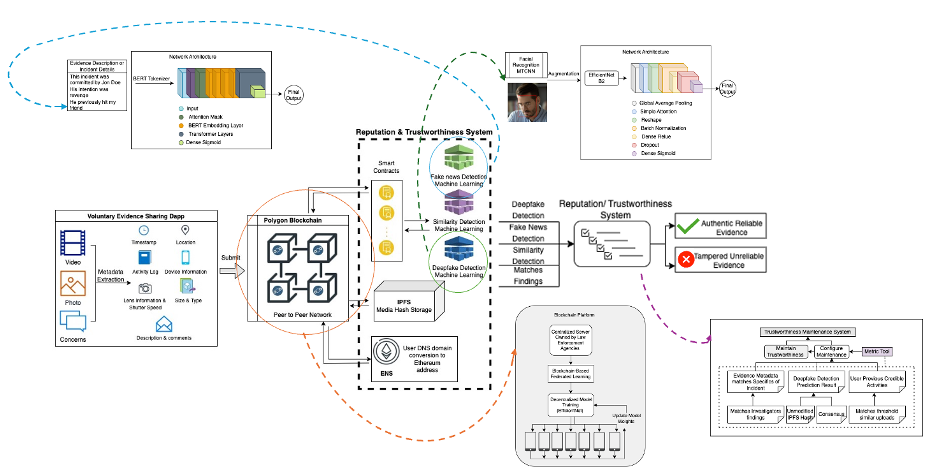

A Blockchain-Based Decentralized Federated Learning Framework for Deepfake Detection in Digital Forensic Scenarios

Publication | News Coverage | Open-Source Framework

Blockchain-Based Decentralized Federated Learning Framework for Digital Forensic Applications & Deepfake Detection (BFDD), an innovative solution designed to support law enforcement agencies in authenticating digital evidence. The framework enhances targeted data extraction and improves deepfake detection capabilities using advanced machine learning techniques. By bolstering evidence authentication processes, the BFDD aims to reinforce the reliability of digital media in judicial settings, addressing critical issues in digital forensics and the integrity of evidence.

AXNav: Replaying Accessibility Tests from Natural Language

In this project, we addressed the challenges of manual accessibility testing, by introducing a novel system that utilizes Large Language Models (LLMs) and pixel-based UI understanding models to automate accessibility testing. This system is designed to take manual test commands, such as “Search for a show in VoiceOver,” and execute them, generating chaptered, navigable videos. These videos include heuristic-based flags for potential accessibility issues, such as improper text resizing or navigation loops in VoiceOver.

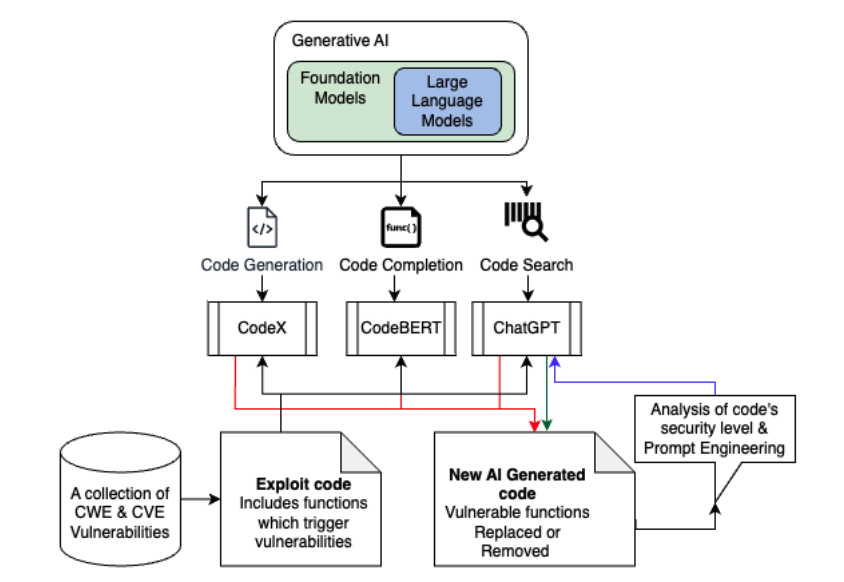

Assessing the Effectiveness and Security Implications of AI Code Generators

In this project, we explored the use of Large Language Model (LLM)-based generative AI tools, such as OpenAI CodeX, CodeBert, and ChatGPT, as well as static analysis tools such as FlawFinder, Visual Code Grepper, Clang Tidy, CyBERT, Snyk, which are increasingly popular among developers for coding assistance. These tools assist in generating and completing code based on user input and instructions, but the quality and security of the generated code can vary significantly. Factors affecting this include the complexity of tasks, clarity of instructions, and the AI’s familiarity with specific programming languages. Our study conducts a thorough analysis and comparison of the code generation and completion capabilities of these models, particularly focusing on the security implications of the code they produce.

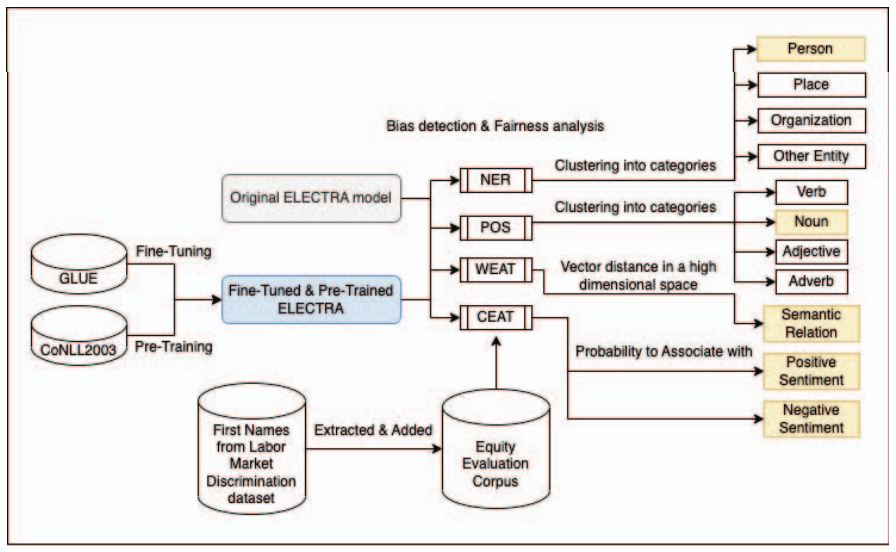

Fairness in Pre-trained Language Models

This research project examines the fairness and efficiency of pretrained language models (PTMs) like ELECTRA and BERT in industrial applications such as HR and targeted advertising. ELECTRA excels in token prediction with less computational demand, making it apt for broad usage, while it’s analyzed for potential biases using datasets with racially and gender-associated names. In contrast, BERT’s effectiveness in language representation is scrutinized for biases through its tokenization and masking processes by measuring the cosine similarity between tokens and their replacements.

The study aims to understand and mitigate inherent biases in these models, thereby enhancing fairness in AI-driven applications and promoting equity in machine learning across various industries.

Talks & Lectures

- ACM Richard Tapia and Grace Hopper Celebration 2020 Presenter “Vegetation Classification Using Lidar Data”

- Guest Speaker: “Introduction to deep learning, from theory to practice” attended by the FAMU Vice President for federal Research 2020

Publications

- Kola-Adelakin, Inioluwa, Taeb, Maryam, Chi, Hongmei “Forensic Investigation of Synthetic Voice Spoofing Detection in Social App” 2025 ACM Southeast Conference, 24-26 April 2025

- Tamascelli, Michael, Bunch, Olivia, Fowler, Blake, Taeb, Maryam, Cohen, Achraf “Academic Advising Chatbot Powered with AI Agent” 2025 ACM Southeast Conference, 24-26 April 2025

- Taeb, Maryam, Wang, Judy, Wetherspoon, Mark, Bernadin, Shonda, Chi, Hongmei. “Seeing the Unseen: A Forecast of Cybersecurity Threats Posed by Vision Language Models” 2024 IEEE International Conference on Big Data (BigData) 15-18 December 2024

- Taeb, Maryam, et al. “AXNav: Replaying Accessibility Tests from Natural Language.” CHI conference on Human Factors in Computing Systems 11-16 May 2024

- Taeb, Maryam, Chi, Hongmei, Bernadin, Shonda. “Assessing the Effectiveness and Security Implications of AI Code Generators.” The Colloquium for Information Systems Security Education (CISSE 2023), Nov 1-3, 2023

- Taeb, Maryam, Chi, Hongmei, Bernadin, Shonda. “Targeted Data Extraction and Deepfake Detection with Blockchain Technology.” International Conference on Universal Village (IEEE UV2022), Oct 22-25, 2022

- Taeb, Maryam, Torres, Yonathan, Chi, Hongmei, Bernadin, Shonda. “Investigating Gender and Racial Bias in ELECTRA.” International conference on Computational Science & Computational Intelligence (CSCI’22), Dec 14-16, 2022

- Elliston, J., Chi, H., Bernadin, S., & Taeb, M, “Integrating Blockchain Technology into Cybersecurity Education.” Future Technologies Conference (FTC) 2022, Nov 2-3, 2022

- Taeb, M., & Bernadin, S, “Broadening Participation in URE Using PS-MMM-based Mentoring for URM Engineering Students.” The Chronicle of Mentoring & Coaching conference 2022, Oct 23-27, 2022

- Taeb, Maryam, Chi, Hongmei, Bernadin, Shonda (2022). “Digital Evidence Acquisition and Deepfake Detection with Decentralized Applications.” Practice and Experience in Advanced Research Computing (PEARC). July 10-14, 2022

- Taeb, Maryam, & Chi, Hongmei (2022). “Comparison of Deepfake Detection Techniques through Deep Learning.” Journal of Cybersecurity and Privacy, 2(1), 89-106.

- Taeb, Maryam, Chi, Hongmei, Yan, Jie, “Applying Machine Learning to Analyze Anti-vaccination on Tweets.” IEEE International Conference on Big Data (BDA COVID-2021), Dec 15-18, 2021

- Taeb, Maryam and Hongmei Chi, “A Personalized Learning Framework for Software Vulnerability Detection and Education.” 2021 International Workshop on Cyber Security (CSW) Aug 13-15, 2021

- Taeb, Maryam, Chi, Hongmei, Jones, Edward. L. et al. “Inherent Discriminability of BERT towards racial Minority Associated Data”, The 21st International Conference on Computational Science and Applications (ICCSA 2021), Sept 13-16, 2021

- A Ali, K Adjei, S Fatimah, K Ezendu, M Taeb, H Chi, C King, V Diaby, “Using Twitter to Examine Public Perceptions about COVID-19 in the United States: A Sentiment Analysis”, ISPOR, May 17-20